Introduction to RNNs

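I have recently been looking for introductory material on deep learning and came across a widely recommended English blog post on RNNs. To help it stick, I decided to translate it.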

What are RNNs?

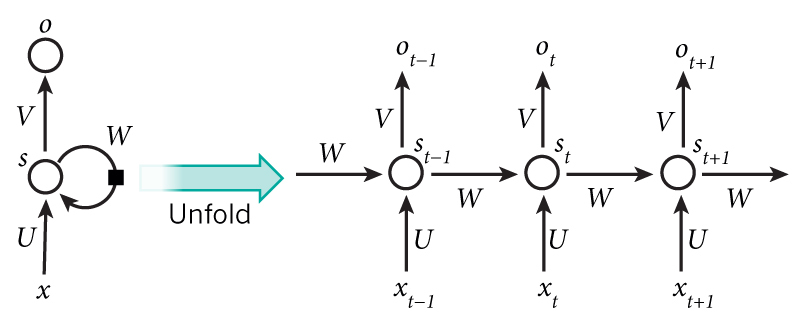

The idea behind RNNs is to make use of sequential information. In a traditional neural network we assume that all inputs (and outputs) are independent of each other. But for many tasks that’s a very bad idea. If you want to predict the next word in a sentence you better know which words came before it. RNNs are called recurrent because they perform the same task for every element of a sequence, with the output being depended on the previous computations. Another way to think about RNNs is that they have a “memory” which captures information about what has been calculated so far. In theory RNNs can make use of information in arbitrarily long sequences, but in practice they are limited to looking back only a few steps (more on this later). Here is what a typical RNN looks like:

Source: Nature

The above diagram shows an RNN being unrolled (or unfolded) into a full network. By unrolling we simply mean that we write out the network for the complete sequence. For example, if the sequence we care about is a sentence of 5 words, the network would be unrolled into a 5-layer neural network, one layer for each word. The formulas that govern the computation happening in an RNN are as follows:

$x_t$ is the input at time step $t$. For example, $x_1$ could be a one-hot vector corresponding to the second word of a sentence (time steps are counted from $t=0$).

$s_t$ is the hidden state at time step $t$. It's the "memory" of the network. $s_t$ is calculated based on the previous hidden state and the input at the current step: $s_t=f(Ux_t + Ws_{t-1})$. The function $f$ is usually a nonlinearity such as tanh or ReLU. $s_{-1}$, which is required to calculate the first hidden state, is typically initialized to all zeroes.

$o_t$ is the output at step $t$. For example, if we wanted to predict the next word in a sentence it would be a vector of probabilities across our vocabulary. $o_t = \mathrm{softmax}(Vs_t)$.
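
To make the three formulas concrete, here is a minimal forward pass written in plain Python so that it runs without any libraries. It is a sketch under assumptions that are not in the text: $f$ is tanh, the vocabulary has 4 words, the hidden state has 3 units, and the weights are small random numbers. The helper names (`one_hot`, `matvec`, `softmax`, `rnn_forward`) are hypothetical.

```python
import math
import random


def one_hot(index, size):
    """Return a one-hot vector of length `size` with a 1 at `index`."""
    v = [0.0] * size
    v[index] = 1.0
    return v


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def softmax(z):
    """Numerically stable softmax."""
    m = max(z)
    exps = [math.exp(x - m) for x in z]
    total = sum(exps)
    return [e / total for e in exps]


def rnn_forward(word_ids, U, W, V, vocab_size, hidden_size):
    """Unroll a vanilla RNN: s_t = tanh(U x_t + W s_{t-1}), o_t = softmax(V s_t)."""
    s_prev = [0.0] * hidden_size                  # s_{-1} initialised to zeros
    states, outputs = [], []
    for word_id in word_ids:                      # one unrolled "layer" per word
        x_t = one_hot(word_id, vocab_size)
        pre = [a + b for a, b in zip(matvec(U, x_t), matvec(W, s_prev))]
        s_t = [math.tanh(p) for p in pre]         # f = tanh (assumed)
        o_t = softmax(matvec(V, s_t))             # distribution over the vocabulary
        states.append(s_t)
        outputs.append(o_t)
        s_prev = s_t
    return states, outputs


rng = random.Random(0)
VOCAB, HIDDEN = 4, 3
U = [[rng.uniform(-0.5, 0.5) for _ in range(VOCAB)] for _ in range(HIDDEN)]   # HIDDEN x VOCAB
W = [[rng.uniform(-0.5, 0.5) for _ in range(HIDDEN)] for _ in range(HIDDEN)]  # HIDDEN x HIDDEN
V = [[rng.uniform(-0.5, 0.5) for _ in range(HIDDEN)] for _ in range(VOCAB)]   # VOCAB x HIDDEN

states, outputs = rnn_forward([0, 2, 1, 3, 2], U, W, V, VOCAB, HIDDEN)
print(len(outputs), [round(sum(o), 6) for o in outputs])   # 5 [1.0, 1.0, 1.0, 1.0, 1.0]
```

Each pass through the loop corresponds to one layer of the unrolled network for a five-word sentence, and the same `U`, `W` and `V` are reused at every step.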

There are a few things to note here:

You can think of the hidden state $s_t$ as the memory of the network. $s_t$ captures information about what happened in all the previous time steps. The output $o_t$ at step $t$ is calculated solely based on the memory at time $t$. As briefly mentioned above, it's a bit more complicated in practice because $s_t$ typically can't capture information from too many time steps ago.

Unlike a traditional deep neural network, which uses different parameters at each layer, an RNN shares the same parameters ($U$, $V$, $W$ above) across all steps. This reflects the fact that we are performing the same task at each step, just with different inputs. This greatly reduces the total number of parameters we need to learn.
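
A quick parameter count illustrates the saving. The sketch assumes the shapes implied by the formulas ($U$ is hidden × vocabulary, $W$ is hidden × hidden, $V$ is vocabulary × hidden, with no bias terms). It compares these against a hypothetical unrolled network that keeps its own copy of all three matrices at every step. The sizes 8000, 100 and 50 are illustrative only, and the function names are made up.

```python
def rnn_param_count(vocab_size, hidden_size):
    """Parameters in U, W and V. Sharing makes this independent of sequence length."""
    u = hidden_size * vocab_size      # U: input -> hidden
    w = hidden_size * hidden_size     # W: hidden -> hidden
    v = vocab_size * hidden_size      # V: hidden -> output
    return u + w + v


def unshared_param_count(vocab_size, hidden_size, seq_len):
    """Hypothetical unrolled network with separate U, W, V at each of seq_len steps."""
    return seq_len * rnn_param_count(vocab_size, hidden_size)


shared = rnn_param_count(8000, 100)
unshared = unshared_param_count(8000, 100, seq_len=50)
print(shared, unshared)   # 1610000 80500000
```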

The above diagram has outputs at each time step, but depending on the task this may not be necessary. For example, when predicting the sentiment of a sentence we may only care about the final output, not the sentiment after each word. Similarly, we may not need inputs at each time step. The main feature of an RNN is its hidden state, which captures some information about a sequence.
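
Here is a minimal sketch of the sentiment case. It assumes a two-class output and tanh hidden units, neither of which the text specifies. The recurrence runs over every input, but the output layer is applied only once, to the final hidden state. The function and variable names are hypothetical, and the inputs are random stand-ins for word vectors.

```python
import math
import random


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def softmax(z):
    m = max(z)
    exps = [math.exp(x - m) for x in z]
    total = sum(exps)
    return [e / total for e in exps]


def classify_sequence(inputs, U, W, V):
    """Many-to-one RNN: update s_t at every step, emit one output from the last state."""
    s = [0.0] * len(W)                                 # s_{-1} = 0
    for x_t in inputs:
        pre = [a + b for a, b in zip(matvec(U, x_t), matvec(W, s))]
        s = [math.tanh(p) for p in pre]
    return softmax(matvec(V, s))                       # only the final output is computed


rng = random.Random(1)
IN, HIDDEN, CLASSES = 5, 4, 2
U = [[rng.gauss(0, 0.3) for _ in range(IN)] for _ in range(HIDDEN)]
W = [[rng.gauss(0, 0.3) for _ in range(HIDDEN)] for _ in range(HIDDEN)]
V = [[rng.gauss(0, 0.3) for _ in range(HIDDEN)] for _ in range(CLASSES)]

sentence = [[rng.random() for _ in range(IN)] for _ in range(7)]   # 7 stand-in word vectors
probs = classify_sequence(sentence, U, W, V)
print(len(probs))   # 2
```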

What can RNNs do?

RNNs have shown great success in many NLP tasks. At this point I should mention that the most commonly used type of RNNs are LSTMs, which are much better at capturing long-term dependencies than vanilla RNNs are. But don't worry, LSTMs are essentially the same thing as the RNN we will develop in this tutorial; they just have a different way of computing the hidden state. We'll cover LSTMs in more detail in a later post. Here are some example applications of RNNs in NLP (by no means an exhaustive list).

Language Modeling and Generating Text

Given a sequence of words we want to predict the probability of each word given the previous words. Language Models allow us to measure how likely a sentence is, which is an important input for Machine Translation (since high-probability sentences are typically correct). A side-effect of being able to predict the next word is that we get a generative model, which allows us to generate new text by sampling from the output probabilities. And depending on what our training data is we can generate all kinds of stuff. In Language Modeling our input is typically a sequence of words (encoded as one-hot vectors for example), and our output is the sequence of predicted words. When training the network we set $o_t = x_{t+1}$ since we want the output at step $t$ to be the actual next word.
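
The training setup $o_t = x_{t+1}$ amounts to shifting the sequence by one position: the input at step $t$ is word $t$ and the target is word $t+1$. The sketch below does three things. It builds those input/target pairs. It scores them with cross-entropy, a common choice that the text does not name, so treat it as an assumption. It also samples a next word from an output distribution to show the generative side. `make_lm_pairs`, `cross_entropy` and `sample_word` are hypothetical names, and the probability vectors are made up for illustration.

```python
import math
import random


def make_lm_pairs(word_ids):
    """Inputs are words 0..n-2 and targets are words 1..n-1, so o_t should predict x_{t+1}."""
    return word_ids[:-1], word_ids[1:]


def cross_entropy(prob_seq, targets):
    """Average negative log-probability assigned to the correct next words."""
    return -sum(math.log(p[y]) for p, y in zip(prob_seq, targets)) / len(targets)


def sample_word(probs, rng):
    """Draw a word id from an output distribution o_t (used for text generation)."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


sentence = [3, 1, 4, 1, 5]                # toy word ids
inputs, targets = make_lm_pairs(sentence)
print(inputs, targets)                    # [3, 1, 4, 1] [1, 4, 1, 5]

# Made-up uniform predictions over a 6-word vocabulary: the loss is log(6).
uniform = [[1 / 6] * 6 for _ in targets]
print(round(cross_entropy(uniform, targets), 4))   # 1.7918

rng = random.Random(0)
print(sample_word([0.1, 0.0, 0.9, 0.0, 0.0, 0.0], rng) in (0, 2))   # True
```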

Research papers about Language Modeling and Generating Text:

- Recurrent neural network based language model

- Extensions of Recurrent neural network based language model

- Generating Text with Recurrent Neural Networks

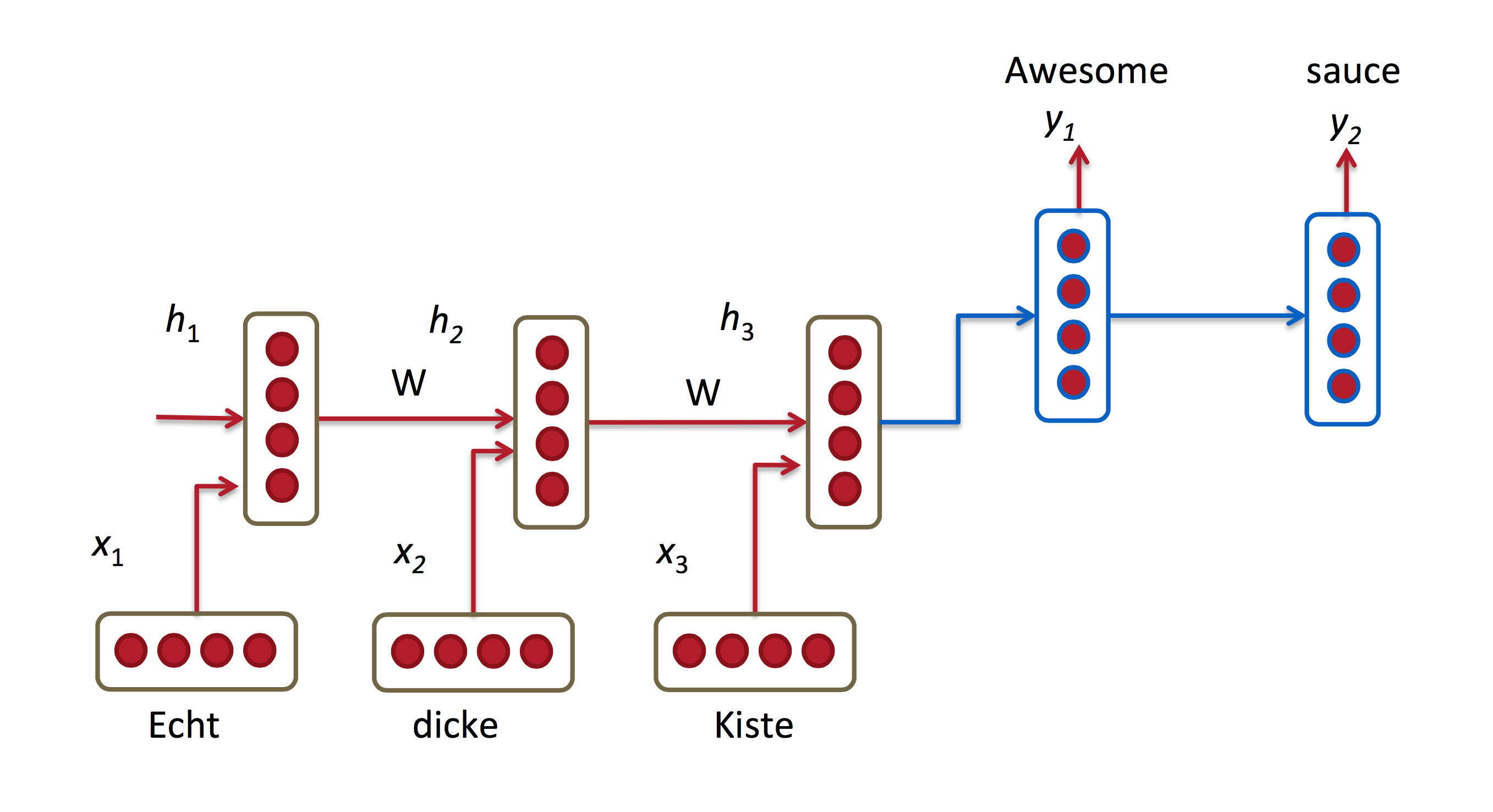

Machine Translation

Machine Translation is similar to language modeling in that our input is a sequence of words in our source language (e.g. German). We want to output a sequence of words in our target language (e.g. English). A key difference is that our output only starts after we have seen the complete input, because the first word of our translated sentences may require information captured from the complete input sequence.

Source: http://cs224d.stanford.edu/lectures/CS224d-Lecture8.pdf
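
One common way to realise "read the whole input, then start writing" is an encoder-decoder (sequence-to-sequence) arrangement, of the kind studied in the "Sequence to Sequence Learning with Neural Networks" paper cited in this section. The Python sketch below makes several assumptions:

- a tanh encoder RNN whose final hidden state becomes the initial state of a separate decoder RNN;
- greedy decoding;
- a hypothetical convention in which word id 0 serves as both the start token and the end-of-sentence token.

The weights are random, so the output is meaningless. Only the order of computation is being illustrated.

```python
import math
import random


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def one_hot(i, n):
    v = [0.0] * n
    v[i] = 1.0
    return v


def rnn_step(U, W, x, s):
    """One tanh RNN step: s_t = tanh(U x_t + W s_{t-1})."""
    return [math.tanh(a + b) for a, b in zip(matvec(U, x), matvec(W, s))]


def translate(src_ids, enc, dec, src_vocab, tgt_vocab, hidden, eos=0, max_len=10):
    # Encoder: read the entire source sentence before producing any output.
    s = [0.0] * hidden
    for i in src_ids:
        s = rnn_step(enc["U"], enc["W"], one_hot(i, src_vocab), s)
    # Decoder: start from the encoder's final state and emit one target word per step.
    out, prev = [], eos              # hypothetical convention: id 0 doubles as the start token
    for _ in range(max_len):
        s = rnn_step(dec["U"], dec["W"], one_hot(prev, tgt_vocab), s)
        scores = matvec(dec["V"], s)
        prev = max(range(tgt_vocab), key=scores.__getitem__)   # greedy: highest-scoring word
        if prev == eos:
            break
        out.append(prev)
    return out


def rand(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(42)
SRC, TGT, H = 6, 5, 4                # source vocab, target vocab, hidden size
enc = {"U": rand(H, SRC, rng), "W": rand(H, H, rng)}
dec = {"U": rand(H, TGT, rng), "W": rand(H, H, rng), "V": rand(TGT, H, rng)}
result = translate([1, 4, 2, 3], enc, dec, SRC, TGT, H)
print(result)
```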

Research papers about Machine Translation:

- A Recursive Recurrent Neural Network for Statistical Machine Translation

- Sequence to Sequence Learning with Neural Networks

- Joint Language and Translation Modeling with Recurrent Neural Networks

Speech Recognition

Given an input sequence of acoustic signals from a sound wave, we can predict a sequence of phonetic segments together with their probabilities.

Research papers about Speech Recognition:

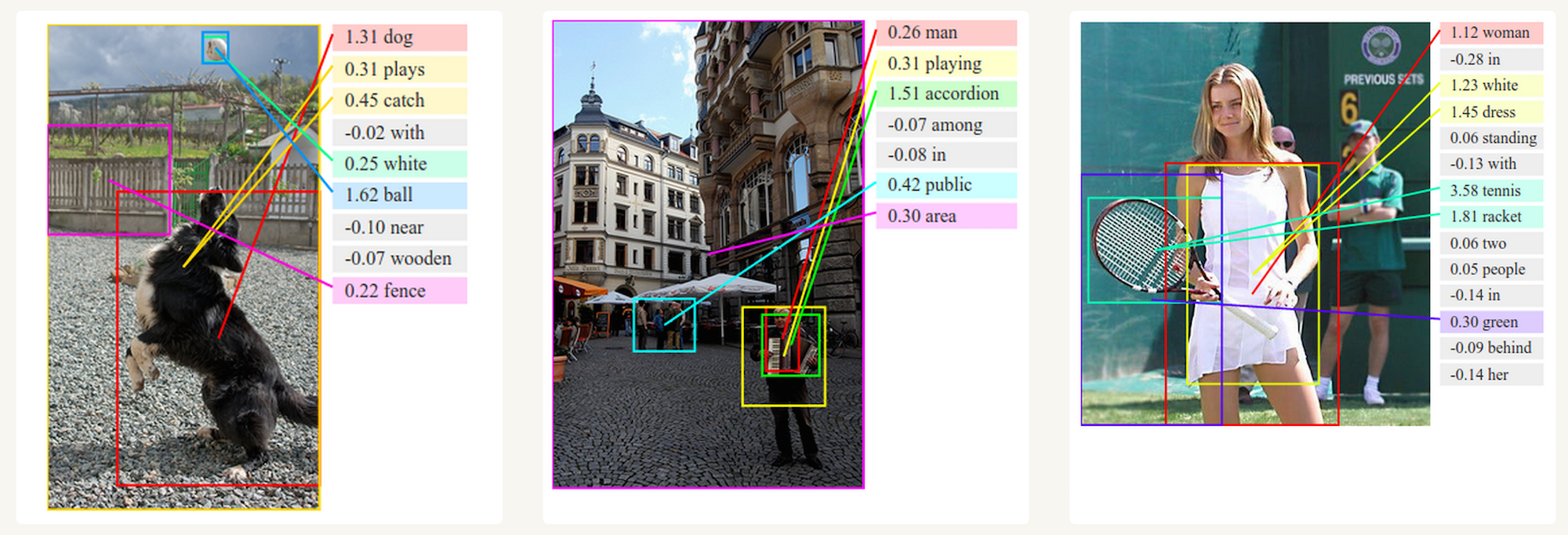

Generating Image Descriptions

Together with convolutional Neural Networks, RNNs have been used as part of a model to generate descriptions for unlabeled images. It’s quite amazing how well this seems to work. The combined model even aligns the generated words with features found in the images.

Source: http://cs.stanford.edu/people/karpathy/deepimagesent/

Training RNNs

Training an RNN is similar to training a traditional neural network. We also use the backpropagation algorithm, but with a little twist. Because the parameters are shared by all time steps in the network, the gradient at each output depends not only on the calculations of the current time step, but also on the previous time steps. For example, in order to calculate the gradient at $t=4$ we would need to backpropagate 3 steps and sum up the gradients. This is called Backpropagation Through Time (BPTT). If this doesn't make a whole lot of sense yet, don't worry, we'll have a whole post on the gory details. For now, just be aware of the fact that vanilla RNNs trained with BPTT have difficulties learning long-term dependencies (i.e. dependencies between steps that are far apart) due to what is called the vanishing/exploding gradient problem. There exists some machinery to deal with these problems, and certain types of RNNs (like LSTMs) were specifically designed to get around them.
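
To see why the gradients are summed over time, consider a scalar RNN. This is a simplification chosen for illustration: $s_t = \tanh(u x_t + w s_{t-1})$, with a loss $L = \frac{1}{2}(s_T - y)^2$ on the last state only. Because $w$ is used at every step, $\partial L/\partial w$ collects one term per earlier step. The sketch computes that sum by walking backwards through time and checks it against a finite-difference estimate. `gradient_scale` then shows how the per-step factor $w(1 - s^2)$ compounds over many steps, under the further hypothetical simplification that every state equals the same value $s$. All names and numbers are made up.

```python
import math


def forward(xs, u, w):
    """Hidden states [s_{-1}, s_0, ..., s_{T-1}] of a scalar tanh RNN."""
    states = [0.0]                                  # s_{-1} = 0
    for x in xs:
        states.append(math.tanh(u * x + w * states[-1]))
    return states


def loss(xs, y, u, w):
    """Squared error on the final hidden state only."""
    return 0.5 * (forward(xs, u, w)[-1] - y) ** 2


def bptt_grad_w(xs, y, u, w):
    """dL/dw by backpropagation through time: one term per time step, summed."""
    states = forward(xs, u, w)
    grad_s = states[-1] - y                          # dL/ds at the last step
    grad_w = 0.0
    for t in range(len(xs), 0, -1):                  # walk backwards through time
        grad_a = grad_s * (1.0 - states[t] ** 2)     # back through tanh
        grad_w += grad_a * states[t - 1]             # this step's contribution to the shared w
        grad_s = grad_a * w                          # hand the gradient to the previous state
    return grad_w


def gradient_scale(w, steps, s=0.5):
    """Factor applied to a gradient sent back `steps` steps, if every state equalled s.

    Each step multiplies by w * (1 - s**2): below 1 in magnitude the signal
    vanishes, above 1 it explodes.
    """
    return (w * (1.0 - s ** 2)) ** steps


xs, y, u, w = [0.5, -1.0, 0.3, 0.8, 0.1], 0.2, 0.7, 0.9
analytic = bptt_grad_w(xs, y, u, w)
eps = 1e-6
numeric = (loss(xs, y, u, w + eps) - loss(xs, y, u, w - eps)) / (2 * eps)
print(abs(analytic - numeric) < 1e-6)             # True
print(gradient_scale(0.9, 20), gradient_scale(2.0, 20))
```

The last line compares $0.675^{20}$ (about $4 \times 10^{-4}$) with $1.5^{20}$ (over 3000). This is the vanishing/exploding behaviour in miniature.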

RNN Extensions

Over the years researchers have developed more sophisticated types of RNNs to deal with some of the shortcomings of the vanilla RNN model. We will cover them in more detail in a later post, but I want this section to serve as a brief overview so that you are familiar with the taxonomy of models.

Bidirectional RNN

Bidirectional RNNs are based on the idea that the output at time $t$ may depend not only on the previous elements in the sequence, but also on future elements. For example, to predict a missing word in a sequence you want to look at both the left and the right context. Bidirectional RNNs are quite simple. They are just two RNNs stacked on top of each other, one reading the sequence forwards and the other reading it backwards. The output is then computed based on the hidden states of both RNNs.
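
Below is a minimal sketch of the idea. It assumes tanh cells and concatenation of the two hidden states; concatenation is one common choice, and the text only says the output depends on both. The forward RNN reads the sequence left to right. The backward RNN reads it right to left with its own weights. The two state sequences are re-aligned before being combined. Names and sizes are hypothetical.

```python
import math
import random


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def run_rnn(inputs, U, W):
    """Hidden state after each input of a tanh RNN, starting from zeros."""
    s, states = [0.0] * len(W), []
    for x in inputs:
        s = [math.tanh(a + b) for a, b in zip(matvec(U, x), matvec(W, s))]
        states.append(s)
    return states


def birnn(inputs, fwd, bwd):
    """Concatenate forward and backward hidden states at each time step."""
    forward_states = run_rnn(inputs, *fwd)                # reads left to right (past context)
    backward_states = run_rnn(inputs[::-1], *bwd)[::-1]   # reads right to left, then re-aligned
    return [f + b for f, b in zip(forward_states, backward_states)]


def rand(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(3)
IN, H = 3, 2
fwd = (rand(H, IN, rng), rand(H, H, rng))   # (U, W) of the forward RNN
bwd = (rand(H, IN, rng), rand(H, H, rng))   # separate (U, W) for the backward RNN
seq = [[rng.random() for _ in range(IN)] for _ in range(4)]
combined = birnn(seq, fwd, bwd)
print(len(combined), len(combined[0]))      # 4 4
```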

Deep Bidirectional RNN

Deep (Bidirectional) RNNs are similar to Bidirectional RNNs, only that we now have multiple layers per time step. In practice this gives us a higher learning capacity (but we also need a lot of training data).
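
Stacking can be sketched by feeding the sequence of hidden states produced by one layer in as the input sequence of the next. For brevity the sketch assumes unidirectional tanh layers. A deep bidirectional RNN would apply the forward/backward combination at every layer. Names and sizes are hypothetical.

```python
import math
import random


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def run_rnn(inputs, U, W):
    """Hidden state after each input of a tanh RNN, starting from zeros."""
    s, states = [0.0] * len(W), []
    for x in inputs:
        s = [math.tanh(a + b) for a, b in zip(matvec(U, x), matvec(W, s))]
        states.append(s)
    return states


def deep_rnn(inputs, layers):
    """layers is a list of (U, W); each layer's hidden states are the next layer's inputs."""
    seq = inputs
    for U, W in layers:
        seq = run_rnn(seq, U, W)
    return seq                                   # top-layer hidden state at every time step


def rand(rows, cols, rng):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(7)
IN, H, DEPTH = 3, 4, 3
layers = [(rand(H, IN if k == 0 else H, rng), rand(H, H, rng)) for k in range(DEPTH)]
top = deep_rnn([[rng.random() for _ in range(IN)] for _ in range(5)], layers)
print(len(top), len(top[0]))                     # 5 4
```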

LSTM networks

LSTM networks are quite popular these days, and we briefly talked about them above. LSTMs don't have a fundamentally different architecture from RNNs, but they use a different function to compute the hidden state. The memory units in LSTMs are called cells, and you can think of them as black boxes. They take as input the previous state $h_{t-1}$ (the counterpart of $s_{t-1}$ in the formulas above) and the current input $x_t$. Internally these cells decide what to keep in (and what to erase from) memory. They then combine the previous state, the current memory, and the input. It turns out that these types of units are very efficient at capturing long-term dependencies. LSTMs can be quite confusing in the beginning, but if you're interested in learning more, this post has an excellent explanation.
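
For readers who want to peek inside the "black box", here is one step of a commonly used LSTM formulation: input, forget and output gates plus a candidate memory, with no peephole connections. The text does not give these equations. Treat this as a sketch of the standard variant, not necessarily the one used in later posts. The weight names such as `W_i` and the concatenated input $[h_{t-1}, x_t]$ are assumptions.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(M, b, v):
    """Compute M v + b, with M given as a list of rows."""
    return [sum(m * x for m, x in zip(row, v)) + bias for row, bias in zip(M, b)]


def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM step. p maps W_* (H x (H + X) matrices) and b_* (length-H biases)."""
    z = h_prev + x_t                                           # concatenation [h_{t-1}, x_t]
    i = [sigmoid(a) for a in affine(p["W_i"], p["b_i"], z)]    # input gate: how much to write
    f = [sigmoid(a) for a in affine(p["W_f"], p["b_f"], z)]    # forget gate: how much to keep
    o = [sigmoid(a) for a in affine(p["W_o"], p["b_o"], z)]    # output gate: how much to expose
    g = [math.tanh(a) for a in affine(p["W_g"], p["b_g"], z)]  # candidate memory content
    c_t = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h_t = [ok * math.tanh(ck) for ok, ck in zip(o, c_t)]
    return h_t, c_t


rng = random.Random(0)
X, H = 3, 2
params = {}
for gate in "ifog":
    params["W_" + gate] = [[rng.uniform(-0.5, 0.5) for _ in range(H + X)] for _ in range(H)]
    params["b_" + gate] = [0.0] * H

h, c = [0.0] * H, [0.0] * H
for x in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]):
    h, c = lstm_step(x, h, c, params)
print(h, c)

# Saturating the gates shows the keep/erase decision: with the forget gate near 1
# and the input gate near 0, the cell memory passes through essentially unchanged.
keep = dict(params, b_f=[50.0] * H, b_i=[-50.0] * H)
_, c_kept = lstm_step([0.3, -0.2, 0.9], h, c, keep)
print(all(abs(a - b) < 1e-12 for a, b in zip(c_kept, c)))   # True
```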

Conclusion

So far so good. I hope you’ve gotten a basic understanding of what RNNs are and what they can do. In the next post we’ll implement a first version of our language model RNN using Python and Theano. Please leave questions in the comments!

Title: Introduction to RNNs

Original link: https://oyeblog.com/2017/introduction_to_rnn/

Published: 2017-10-11 19:11

Last updated: 2023-10-22 15:06

License: All articles on this site are licensed under CC BY-NC-SA 4.0. Please credit the source when republishing.